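Background:

When changing the permissions of a Kubernetes ServiceAccount, the roleRef of a RoleBinding cannot be modified in place, so the change has to be made indirectly by deleting the RoleBinding and creating it again: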

    kubectl get rolebinding -n ns-p0821shr rb-4yk46fc8 -o yaml

    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      annotations:
        user.sealos.io/owner: 4yk46fc8
      creationTimestamp: "2024-10-17T08:45:50Z"
      labels:
        user.sealos.io/owner: 4yk46fc8
      name: rb-4yk46fc8
      namespace: ns-p0821shr
      ownerReferences:
      - apiVersion: user.sealos.io/v1
        blockOwnerDeletion: true
        controller: true
        kind: User
        name: 4yk46fc8
        uid: ed012dd7-a20f-49ff-8be2-ac1b6d00cbbc
      resourceVersion: "211693825"
      uid: bb09bc20-2144-4bfb-bc1e-bfe479864c63
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: Manager
    subjects:
    - kind: ServiceAccount
      name: 4yk46fc8
      namespace: user-system

    kubectl get role -n ns-p0821shr

    NAME        CREATED AT
    Developer   2024-10-17T03:20:34Z
    Manager     2024-10-17T03:20:34Z
    Owner       2024-10-17T03:20:34Z

Suppose we want to change the Role bound by the RoleBinding above from Manager to Developer. kubectl edit cannot change this field directly:

    # rolebindings.rbac.authorization.k8s.io "rb-4yk46fc8" was not valid:
    # * roleRef: Invalid value: rbac.RoleRef{APIGroup:"rbac.authorization.k8s.io", Kind:"Role", Name:"Developer"}: cannot change roleRef

So we delete the RoleBinding first and then create it again. All of these operations go through the Kubernetes API from our Go backend. The code looks like this:

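    // Delete the existing RoleBinding; a NotFound error is ignored.
    if err := r.Delete(ctx, rolebinding); client.IgnoreNotFound(err) != nil {
    	r.Recorder.Eventf(request, v1.EventTypeWarning, "Failed to delete rolebinding", "Failed to delete rolebinding %s/%s", rolebinding.Namespace, rolebinding.Name)
    	return ctrl.Result{}, err
    }
    // Recreate it immediately with the new roleRef.
    if _, err := ctrl.CreateOrUpdate(ctx, r.Client, rolebinding, setUpOwnerReferenceFc); err != nil {
    	r.Recorder.Eventf(request, v1.EventTypeWarning, "Failed to create/update rolebinding", "Failed to create rolebinding %s/%s", rolebinding.Namespace, rolebinding.Name)
    	return ctrl.Result{}, err
    }

The implementation looked fine, and single test runs passed. Only when permissions were changed frequently did we notice that, intermittently, the new permission was not applied, and the logs showed no error. This is the only place in the code that touches permissions, so my guess was that creating immediately after deleting lets CreateOrUpdate still fetch the RoleBinding that is being deleted.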
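To see how that suspicion could produce a silent failure, here is a minimal sketch of the get, mutate, then create-or-update flow that controllerutil.CreateOrUpdate follows. The `binding` and `store` types and the `createOrUpdate` helper are hypothetical stand-ins, not the real API, and the mutate function is assumed to set only the owner, as the name setUpOwnerReferenceFc suggests.

```go
package main

import "fmt"

// binding is a hypothetical, stripped-down RoleBinding.
type binding struct {
	Name    string
	RoleRef string
	Owner   string
}

// store is a hypothetical stand-in for whatever the client's Get reads from.
type store map[string]binding

// createOrUpdate models the get -> mutate -> create-or-update flow and
// reports which branch was taken.
func createOrUpdate(s store, obj *binding, mutate func()) string {
	existing, found := s[obj.Name]
	if !found {
		mutate()
		s[obj.Name] = *obj
		return "created"
	}
	// Found: the caller's desired state is overwritten by what was read.
	*obj = existing
	mutate()
	if *obj == existing {
		return "unchanged" // nothing is written and no error is reported
	}
	s[obj.Name] = *obj
	return "updated"
}

func main() {
	// The old binding is still visible to the read path.
	s := store{"rb-demo": {Name: "rb-demo", RoleRef: "Manager", Owner: "demo"}}
	desired := &binding{Name: "rb-demo", RoleRef: "Developer"}
	// Assumed mutate function: it only sets the owner.
	result := createOrUpdate(s, desired, func() { desired.Owner = "demo" })
	fmt.Println(result, desired.RoleRef) // unchanged Manager
}
```

If the read still returns the old object, the desired roleRef is lost before anything is written, and the call returns without an error. That would match the symptom of a missing permission with clean logs.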

Some background on how Kubernetes deletes resources:

    // DeletionPropagation decides if a deletion will propagate to the dependents of
    // the object, and how the garbage collector will handle the propagation.
    type DeletionPropagation string

    const (
    	// Orphans the dependents.
    	DeletePropagationOrphan DeletionPropagation = "Orphan"
    	// Deletes the object from the key-value store, the garbage collector will
    	// delete the dependents in the background.
    	DeletePropagationBackground DeletionPropagation = "Background"
    	// The object exists in the key-value store until the garbage collector
    	// deletes all the dependents whose ownerReference.blockOwnerDeletion=true
    	// from the key-value store. API server will put the "foregroundDeletion"
    	// finalizer on the object, and sets its deletionTimestamp. This policy is
    	// cascading, i.e., the dependents will be deleted with Foreground.
    	DeletePropagationForeground DeletionPropagation = "Foreground"
    )

Background policy (the default): the owner object is deleted first, then its dependents. In Background mode, k8s deletes the owner object immediately, and the garbage collector then deletes its dependents in the background.

Orphan policy: the dependents are orphaned. Only the owner object is deleted; the resources it manages are left in place.

Foreground policy: this policy is cascading. The owner object stays in the key-value store, with its deletionTimestamp set and the foregroundDeletion finalizer added, until the dependents with ownerReference.blockOwnerDeletion=true have been deleted. The dependents are themselves deleted with Foreground.
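The three policies can be compared with a toy model. This is a simplified sketch: the `object` type, the in-memory store, and the single-pass `collectGarbage` function are hypothetical and only mimic the behavior described above. In particular, the Orphan case is applied in one step here for brevity.

```go
package main

import "fmt"

// object is a hypothetical, minimal API object.
type object struct {
	Owner    string // name of the owning object, "" if none
	Deleting bool   // models a set deletionTimestamp
}

// deleteWithPolicy changes the toy store the way each policy is described.
func deleteWithPolicy(store map[string]object, name, policy string) {
	switch policy {
	case "Background":
		delete(store, name) // owner goes first; dependents are left for the GC
	case "Orphan":
		delete(store, name)
		for dep, o := range store {
			if o.Owner == name {
				o.Owner = "" // dependents survive without an owner
				store[dep] = o
			}
		}
	case "Foreground":
		o := store[name]
		o.Deleting = true // owner stays visible until its dependents are gone
		store[name] = o
	}
}

// hasDependents reports whether any object is still owned by owner.
func hasDependents(store map[string]object, owner string) bool {
	for _, o := range store {
		if o.Owner == owner {
			return true
		}
	}
	return false
}

// collectGarbage plays the garbage collector for one pass.
func collectGarbage(store map[string]object) {
	// Remove dependents whose owner is missing or being deleted.
	for name, o := range store {
		owner, ok := store[o.Owner]
		if o.Owner != "" && (!ok || owner.Deleting) {
			delete(store, name)
		}
	}
	// Remove owners that were waiting for their dependents.
	for name, o := range store {
		if o.Deleting && !hasDependents(store, name) {
			delete(store, name)
		}
	}
}

// newStore returns an owner "user" with one dependent "rb".
func newStore() map[string]object {
	return map[string]object{"user": {}, "rb": {Owner: "user"}}
}

func main() {
	for _, policy := range []string{"Background", "Orphan", "Foreground"} {
		s := newStore()
		deleteWithPolicy(s, "user", policy)
		fmt.Println(policy, "after delete:", len(s), "object(s)")
		collectGarbage(s)
		fmt.Println(policy, "after GC:", len(s), "object(s)")
	}
}
```

The model deletes the owner ("user") to make the differences visible. In the YAML shown earlier, the RoleBinding is a dependent of the User object and lists no dependents of its own, so the propagation policy would presumably make little difference when the RoleBinding itself is deleted.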

My guess was that foreground deletion might avoid the failure of the immediate create, so I added the foreground deletion policy to Delete:

    // Same delete as before, now with the Foreground propagation policy.
    if err := r.Delete(ctx, rolebinding, client.PropagationPolicy(metav1.DeletePropagationForeground)); client.IgnoreNotFound(err) != nil {
    	r.Recorder.Eventf(request, v1.EventTypeWarning, "Failed to delete rolebinding", "Failed to delete rolebinding %s/%s", rolebinding.Namespace, rolebinding.Name)
    	return ctrl.Result{}, err
    }

Testing showed that it still failed ☹️. I then found a similar issue reported in the community:

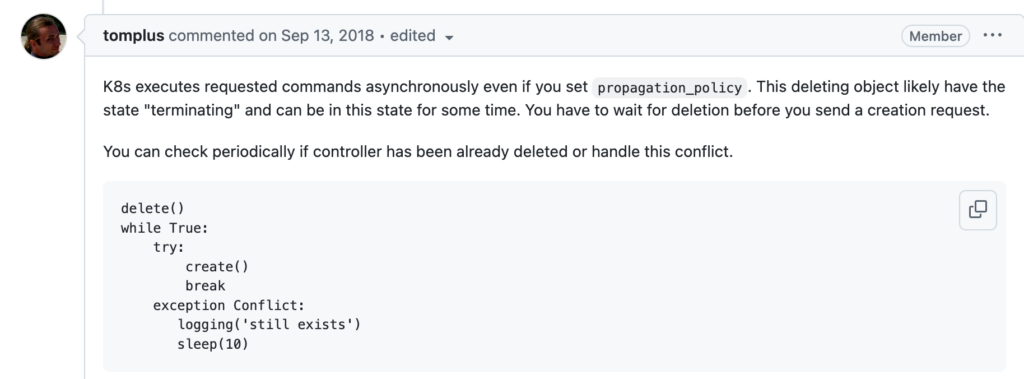

https://github.com/kubernetes-client/python/issues/623#issuecomment-420958580

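Roughly, it says that operations issued through the k8s client are asynchronous requests: even after delete has been called, the object spends some time in a terminating state, so you need to wait a little.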
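The "wait a little" advice amounts to polling until the object is no longer found. Below is a minimal standard-library sketch of that idea. `waitUntilGone` and `simulate` are hypothetical helpers, and the `exists` callback stands in for a Get call against the API.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// waitUntilGone calls exists every interval until it reports false,
// returns an error, or ctx is done.
func waitUntilGone(ctx context.Context, interval time.Duration, exists func() (bool, error)) error {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for {
		found, err := exists()
		if err != nil {
			return err
		}
		if !found {
			return nil
		}
		select {
		case <-ctx.Done():
			return ctx.Err()
		case <-ticker.C:
		}
	}
}

// simulate runs waitUntilGone against a hypothetical object that disappears
// on the goneAfter-th check, and returns how many checks were made.
func simulate(goneAfter, timeoutMillis int) (int, error) {
	checks := 0
	exists := func() (bool, error) {
		checks++
		return checks < goneAfter, nil
	}
	timeout := time.Duration(timeoutMillis) * time.Millisecond
	ctx, cancel := context.WithTimeout(context.Background(), timeout)
	defer cancel()
	err := waitUntilGone(ctx, 5*time.Millisecond, exists)
	return checks, err
}

func main() {
	checks, err := simulate(3, 1000)
	fmt.Println(checks, err) // 3 <nil>
}
```

The context timeout bounds the wait, so a reconcile loop is not blocked indefinitely if the object never disappears.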

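Fine, that did not work either. I looked through DeleteOptions for a parameter that would make the operation synchronous and found another one, GracePeriodSeconds:

    // GracePeriodSeconds is the duration in seconds before the object should be
    // deleted. Value must be non-negative integer. The value zero indicates
    // delete immediately. If this value is nil, the default grace period for the
    // specified type will be used.
    GracePeriodSeconds *int64

As the name suggests, this is how long the object lingers before it is deleted, and 0 means delete immediately. That sounded like exactly what I needed, so I changed the code again: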

    // Ask for immediate deletion by setting a zero grace period.
    zeroGrace := int64(0)
    deleteOptions := &client.DeleteOptions{
    	GracePeriodSeconds: &zeroGrace,
    }
    if err := r.Delete(ctx, rolebinding, deleteOptions); client.IgnoreNotFound(err) != nil {
    	r.Recorder.Eventf(request, v1.EventTypeWarning, "Failed to delete rolebinding", "Failed to delete rolebinding %s/%s", rolebinding.Namespace, rolebinding.Name)
    	return ctrl.Result{}, err
    }

Running it again showed that this did not help either. Reading the parameter more carefully: when the value is left nil, the default grace period for the type is used. A RoleBinding, however, has no such value the way a Pod does (https://kubernetes.io/zh-cn/docs/tutorials/services/pods-and-endpoint-termination-flow/). RoleBinding and ClusterRoleBinding are non-terminating resources. Unlike Pods, they do not represent running workloads or processes, so there is no graceful shutdown. A RoleBinding therefore has no default grace period: leaving GracePeriodSeconds nil already means it is deleted immediately, with no delay. So this parameter was not the problem either.

So I fell back to simply waiting for the deletion to finish before creating. The modified code:

    if err := r.Delete(ctx, rolebinding); client.IgnoreNotFound(err) != nil {
    	r.Recorder.Eventf(request, v1.EventTypeWarning, "Failed to delete rolebinding", "Failed to delete rolebinding %s/%s", rolebinding.Namespace, rolebinding.Name)
    	return ctrl.Result{}, err
    }
    // Poll once per second, for up to one minute, until Get reports NotFound.
    err := wait.PollUntilContextTimeout(ctx, time.Second, time.Minute, true, func(ct context.Context) (bool, error) {
    	rb := &rbacv1.RoleBinding{}
    	err := r.Get(ct, types.NamespacedName{Name: rolebinding.Name, Namespace: rolebinding.Namespace}, rb)
    	if apierrors.IsNotFound(err) {
    		return true, nil
    	}
    	// Still present (or Get failed for another reason): keep polling.
    	return false, nil
    })
    if err != nil {
    	r.Recorder.Eventf(request, v1.EventTypeWarning, "Failed to delete rolebinding", "Failed to delete rolebinding %s/%s", rolebinding.Namespace, rolebinding.Name)
    	return ctrl.Result{}, err
    }
    if _, err := ctrl.CreateOrUpdate(ctx, r.Client, rolebinding, setUpOwnerReferenceFc); err != nil {
    	if rolebinding.RoleRef.Name != string(request.Spec.Role) {
    		r.Recorder.Eventf(request, v1.EventTypeWarning, "Failed to update rolebinding", "Failed to create rolebinding %s/%s, role: %s", rolebinding.Namespace, rolebinding.Name, request.Spec.Role)
    		return ctrl.Result{}, err
    	}
    	r.Recorder.Eventf(request, v1.EventTypeWarning, "Failed to create/update rolebinding", "Failed to create rolebinding %s/%s", rolebinding.Namespace, rolebinding.Name)
    	return ctrl.Result{}, ctrl.SetControllerReference(bindUser, rolebinding, r.Scheme)
    }

This makes sure the delete and the create both succeed, but I still wanted to find the root cause. Then it occurred to me that the client used by a kube controller comes with a cache. Fetching the RoleBinding through a non-cached reader should avoid the problem:

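    mgr.GetAPIReader().Get(ctx context.Context, key ObjectKey, obj Object, opts ...GetOption) error

In fact, I do not need to care whether the Get finds anything at all. I can simply go ahead and create, because the deleted RoleBinding never lingers in a terminating state. So after all these twists, a plain delete followed by a create is enough. Every reconcile, however many times it is triggered, performs the delete and then the create, so CreateOrUpdate can be dropped: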

    if err := r.Delete(ctx, rolebinding); client.IgnoreNotFound(err) != nil {
    	r.Recorder.Eventf(request, v1.EventTypeWarning, "Failed to delete rolebinding", "Failed to delete rolebinding %s/%s", rolebinding.Namespace, rolebinding.Name)
    	return ctrl.Result{}, err
    }
    // Create directly instead of CreateOrUpdate, so no read happens before the write.
    if err := r.Create(ctx, rolebinding); err != nil {
    	r.Recorder.Eventf(request, v1.EventTypeWarning, "Failed to create rolebinding", "Failed to create rolebinding %s/%s", rolebinding.Namespace, rolebinding.Name)
    	return ctrl.Result{}, err
    }
    if err := setUpOwnerReferenceFc(); err != nil {
    	r.Recorder.Eventf(request, v1.EventTypeWarning, "Failed to set owner reference", "Failed to set owner reference for rolebinding %s/%s", rolebinding.Namespace, rolebinding.Name)
    	return ctrl.Result{}, err
    }

Testing confirmed it: no problems at all! After all the twists and turns, the only change needed was to remove CreateOrUpdate, though the detour did deepen my understanding of the k8s client's deletion policies.
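The role of the client cache can be illustrated with a toy model: reads are served from a local copy that lags behind, while writes go straight to the server. The `apiServer` and `cachedClient` types and their methods are hypothetical, and `sync` stands in for watch events reaching the cache later.

```go
package main

import (
	"errors"
	"fmt"
)

// apiServer is a hypothetical source of truth: RoleBinding name -> role name.
type apiServer map[string]string

// cachedClient reads from a local copy and writes straight to the server.
type cachedClient struct {
	server apiServer
	cache  map[string]string
}

func newCachedClient(server apiServer) *cachedClient {
	c := &cachedClient{server: server}
	c.sync()
	return c
}

// sync refreshes the cache; it stands in for watch events arriving later.
func (c *cachedClient) sync() {
	c.cache = make(map[string]string, len(c.server))
	for name, role := range c.server {
		c.cache[name] = role
	}
}

// Get reads the possibly stale cache.
func (c *cachedClient) Get(name string) (string, bool) {
	role, ok := c.cache[name]
	return role, ok
}

// GetLive bypasses the cache, like a non-cached reader would.
func (c *cachedClient) GetLive(name string) (string, bool) {
	role, ok := c.server[name]
	return role, ok
}

// Delete removes the object on the server only.
func (c *cachedClient) Delete(name string) { delete(c.server, name) }

// Create writes to the server and fails if the name is taken.
func (c *cachedClient) Create(name, role string) error {
	if _, exists := c.server[name]; exists {
		return errors.New("already exists")
	}
	c.server[name] = role
	return nil
}

func main() {
	c := newCachedClient(apiServer{"rb-demo": "Manager"})
	c.Delete("rb-demo")
	_, cached := c.Get("rb-demo")
	_, live := c.GetLive("rb-demo")
	fmt.Println("cached:", cached, "live:", live) // cached: true live: false
	fmt.Println("create error:", c.Create("rb-demo", "Developer"))
	c.sync()
	fmt.Println(c.Get("rb-demo"))
}
```

In this model, a cached read right after the delete still sees the old binding, while a direct create succeeds because the server no longer has it. That is consistent with plain Delete followed by Create working where CreateOrUpdate did not.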

For garbage collection, see the official documentation: https://kubernetes.io/docs/concepts/workloads/controllers/garbage-collection/